OpenAI’s DALL-E is a simple Transformer-based approach that autoregressively models the text and image tokens as a single stream of data.

Autoregressive: a model that predicts future values from past values. It is used for forecasting when successive values in a time series are correlated.
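To make the definition concrete, here is a minimal sketch of the simplest autoregressive model, AR(1), where each value is predicted from the one before it. The function names `fit_ar1` and `forecast` and the toy series are made up for illustration. DALL-E applies the same principle to tokens instead of numbers: each token is predicted from the tokens that precede it.

```python
def fit_ar1(series):
    """Least-squares fit of x[t] ~ c + phi * x[t-1] (toy AR(1) model)."""
    prev, curr = series[:-1], series[1:]
    n = len(prev)
    mean_prev = sum(prev) / n
    mean_curr = sum(curr) / n
    cov = sum((p - mean_prev) * (q - mean_curr) for p, q in zip(prev, curr))
    var = sum((p - mean_prev) ** 2 for p in prev)
    phi = cov / var
    c = mean_curr - phi * mean_prev
    return c, phi


def forecast(series, steps, c, phi):
    """Roll the model forward: every prediction is fed back in as the next input."""
    history = list(series)
    for _ in range(steps):
        history.append(c + phi * history[-1])
    return history[len(series):]


# Toy series in which each value is exactly twice the previous one.
series = [1.0, 2.0, 4.0, 8.0, 16.0]
c, phi = fit_ar1(series)
predictions = forecast(series, 2, c, phi)
print(c, phi, predictions)
```

The feedback loop in `forecast` is the part that carries over to DALL-E: generation proceeds one step at a time, and each step conditions on everything produced so far.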

Introduction:

- History of text-to-image synthesis:

- Various approaches have tried to improve visual fidelity, but the generated samples still suffer from problems such as:

  - object distortion

  - illogical object placement

  - unnatural blending of foreground and background elements

- E.g.: extending the DRAW generative model to condition on image captions and generate novel visual scenes, GANs for improved image fidelity, and an energy-based framework for conditional image generation to improve sample quality.

- When compute, model size, and data are scaled carefully, autoregressive transformers achieve strong results in several domains, such as text, images, and audio.

- This work demonstrates that training a 12-billion-parameter autoregressive transformer on 250 million image-text pairs collected from the internet results in a flexible, high-fidelity generative model of images that is controllable through natural language.

Zero-shot learning: at test time, the learner observes samples from classes that were not seen during training and must predict the class they belong to. Prediction therefore happens on the fly, using auxiliary information about the classes instead of labeled training examples.

Method:

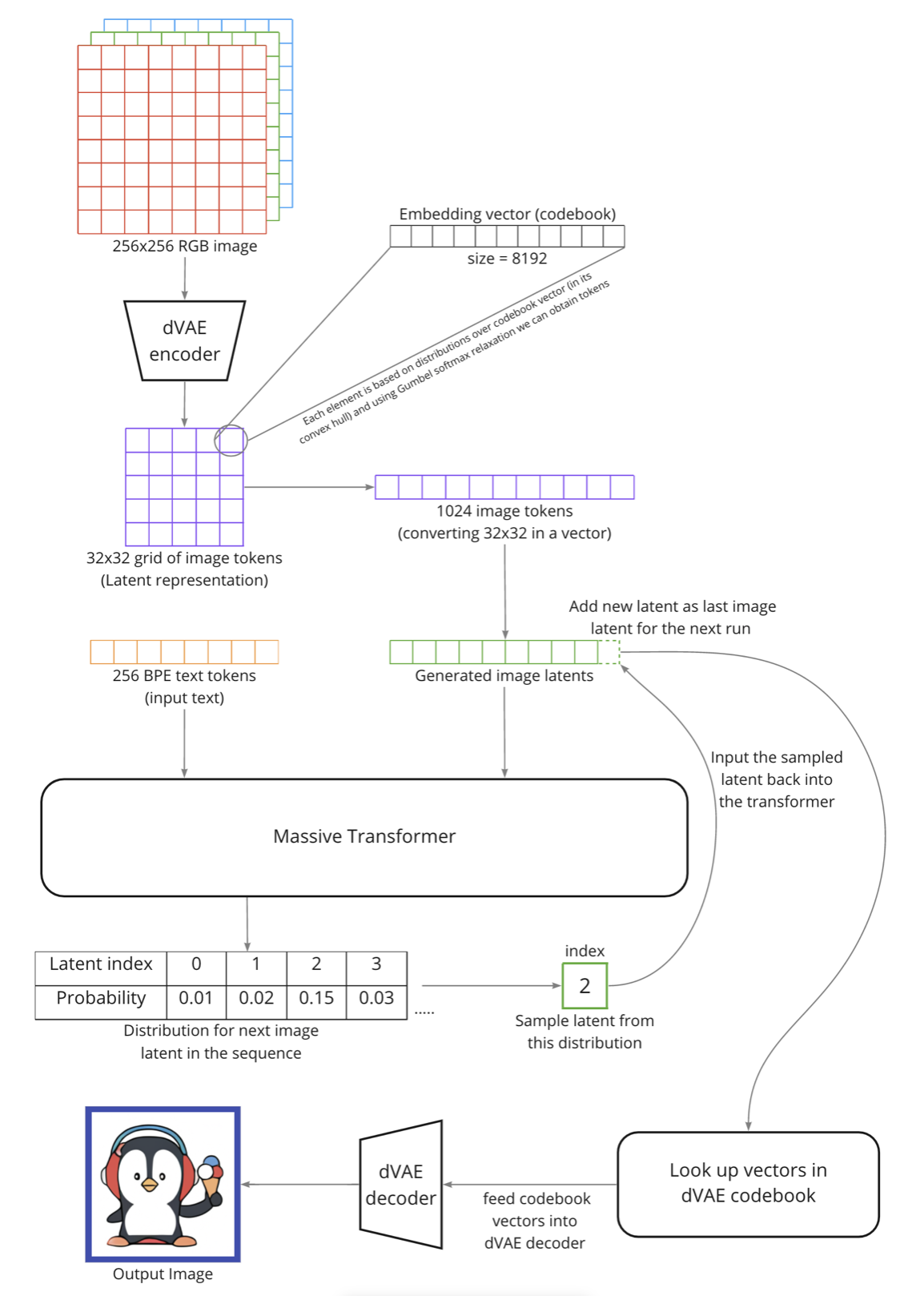

- Goal: train a transformer to autoregressively model the text and image tokens as a single stream of data.

Note: using the raw pixels of the image directly as tokens is computationally expensive and requires a lot of memory.

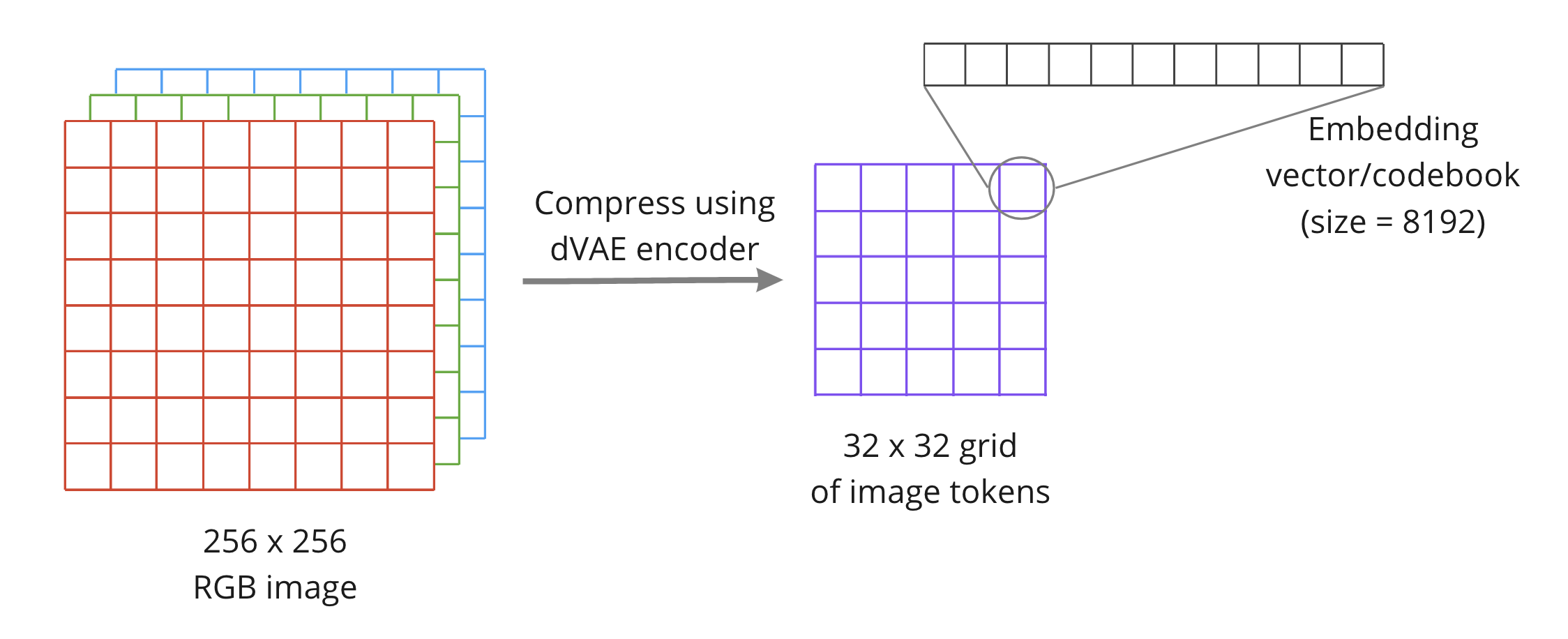

Stage 1:

- Train a discrete VAE (dVAE) to compress each 256x256 RGB image into a 32x32 grid of image tokens, where each token can take one of 8192 possible values.

- This reduces the context size of the transformer by a factor of 192 (from 256x256x3 = 196,608 pixel values to 32x32 = 1024 tokens) without significant degradation in visual quality.
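The factor of 192 follows directly from the sizes quoted above. The short check below is just arithmetic on those numbers; the constant names are my own.

```python
import math

IMAGE_SIDE = 256      # input image is 256x256
CHANNELS = 3          # RGB
GRID_SIDE = 32        # dVAE output is a 32x32 grid of tokens
CODEBOOK_SIZE = 8192  # possible values per image token

pixel_values = IMAGE_SIDE * IMAGE_SIDE * CHANNELS  # values the transformer would see with raw pixels
image_tokens = GRID_SIDE * GRID_SIDE               # values it sees after compression
reduction = pixel_values // image_tokens

# Each token replaces an 8x8 patch of RGB pixels: 8 * 8 * 3 = 192 values.
patch_side = IMAGE_SIDE // GRID_SIDE
bits_per_token = math.log2(CODEBOOK_SIZE)

print(pixel_values, image_tokens, reduction)  # 196608 1024 192
print(patch_side, bits_per_token)             # 8 13.0
```

So each image token stands in for an 8x8 RGB patch and carries 13 bits of information, which is why the compression is lossy but still cheap enough for the transformer to handle.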

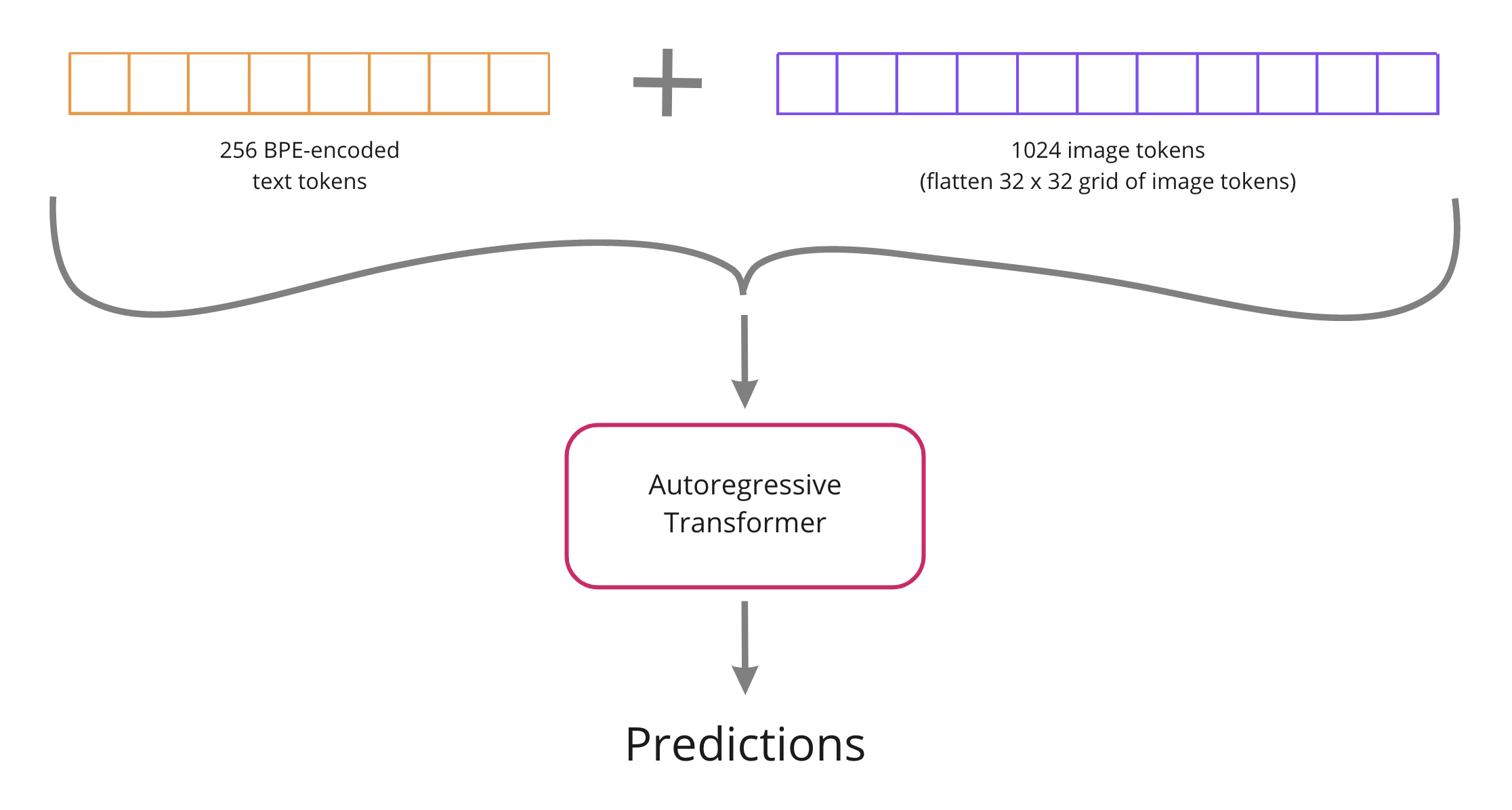

Stage 2:

- Concatenate up to 256 BPE-encoded text tokens with the 32x32 = 1024 image tokens.

- Train an autoregressive transformer to model the joint distribution over the text and image tokens.
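The two steps above can be sketched in plain Python. This is an illustration under stated assumptions, not the actual DALL-E code: the text vocabulary size of 16384 is the value reported in the DALL-E paper rather than in this post, and the single `PAD_ID`, the `IMAGE_OFFSET` layout, and the helper names `build_stream` and `next_token_pairs` are hypothetical simplifications.

```python
# Sizes stated in this post.
MAX_TEXT_TOKENS = 256
NUM_IMAGE_TOKENS = 32 * 32   # 1024
IMAGE_VOCAB_SIZE = 8192

# Assumptions for illustration only.
TEXT_VOCAB_SIZE = 16384             # BPE vocabulary size reported in the DALL-E paper
PAD_ID = TEXT_VOCAB_SIZE            # hypothetical: one shared padding id
IMAGE_OFFSET = TEXT_VOCAB_SIZE + 1  # hypothetical: image ids live after text ids


def build_stream(text_tokens, image_tokens):
    """Pad the caption to 256 tokens and append the 1024 image tokens."""
    if len(text_tokens) > MAX_TEXT_TOKENS:
        raise ValueError("caption is longer than 256 BPE tokens")
    if len(image_tokens) != NUM_IMAGE_TOKENS:
        raise ValueError("expected exactly 1024 image tokens")
    if any(not 0 <= t < TEXT_VOCAB_SIZE for t in text_tokens):
        raise ValueError("text token id out of range")
    if any(not 0 <= t < IMAGE_VOCAB_SIZE for t in image_tokens):
        raise ValueError("image token id out of range")
    padded_text = list(text_tokens) + [PAD_ID] * (MAX_TEXT_TOKENS - len(text_tokens))
    # Shift image ids so they never collide with text ids in the shared stream.
    shifted_image = [IMAGE_OFFSET + t for t in image_tokens]
    return padded_text + shifted_image


def next_token_pairs(stream):
    """Autoregressive training pairs: position i is trained to predict token i + 1."""
    return stream[:-1], stream[1:]


text_tokens = [17, 942, 5]  # made-up BPE ids for a short caption
image_tokens = [i % IMAGE_VOCAB_SIZE for i in range(NUM_IMAGE_TOKENS)]  # made-up dVAE ids

stream = build_stream(text_tokens, image_tokens)
inputs, targets = next_token_pairs(stream)
print(len(stream), len(inputs), len(targets))  # 1280 1279 1279
```

Because text comes first in the stream, every image token is predicted with the full caption in its context, which is what makes the generated image controllable through the text.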

Complete DALL-E pipeline

Thanks for reading!

Got any questions or suggestions? Want to share any thoughts or ideas with me? Feel free to reach out to me on LinkedIn. Always happy to help!

Also, you can view my other works on GitHub and my blog.

Till then, see you in my next post!